Please go here for my games!

The Sun And Moon

I’ve been working on a commercial release of The Sun And Moon for the past few months. To keep up to date with my progress, please have a look at my development log here.

Ludum Dare #29

For Ludum Dare #29 I made a game called The Sun And Moon. It’s an action/puzzle platformer but I don’t want to say too much about it because I think it’s more fun to discover the game for yourself. I would love for you to check it out and tell me what you think!

In related news, I’m working on a full version with features such as:

- Controller support.

- Multiple resolutions as well as proper fullscreen support.

- Best times for each level with bronze, silver and gold goals.

- Loads of levels.

- Way better audio.

If there are other things you want to see in a full version of The Sun And Moon, please do let me know; I want to make this game as fun to play as possible!

Beaver Engine

I’ve taken Busy Busy Beaver, a game I made a month ago for the Bacon Game Jam 07, and stripped it down into a relatively simple engine, which I’ve dubbed the Beaver Engine. More about it soon!

Busy Busy Beaver Source

A few weeks ago I made a timelapse of the 48 hour development of Busy Busy Beaver. Obviously these sorts of things are hard to follow, so I wanted to eventually upload the source code, too. Well, finally, here it is!

The code hasn’t been cleaned up at all, so expect it to be pretty messy in many places. The main objects to look at are o_player and o_data. o_player controls the beaver himself in all the active levels, while o_data holds the matrices storing all relevant level details. o_room_control handles the user interface, o_game_control handles miscellaneous things such as settings, room initialization and sound, and o_camera_control handles the view and parallax backgrounds. All the other objects are either relatively unimportant or should be self-explanatory.

A few small things have been updated and the game now saves your progress, but otherwise the source more or less represents the 48 hour version.

If you use any of this source for your own game I ask that you please credit me (as Daniel Linssen). Please do not use this source for any commercial work without talking to me first.

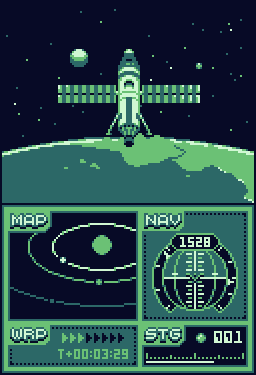

Kerbal Space Program, Gameboy Edition

A few weeks ago I re-imagined Kerbal Space Program as a Gameboy/DS game, using the four colors of a Gameboy and the two screens of a DS. This was the result.

The top screen is partially based on this picture. The bottom screen is a collection of most of the UI elements in the game: the map, navball, warp and stage.

Game Jam Rating Systems and Solutions

I was talking to a friend today about the problems which arise around game jam rating systems. Specifically, ways of encouraging developers to give meaningful ratings and feedback to a large number of other entries. I am referring only to online game jams with many entries, where it is difficult or impossible for every developer to rate every other game.

Why does it matter if games don’t get enough ratings?

It can be discouraging to have spent a weekend developing a game which you are really proud of, only to have a small number of people play it. In many game jams I’ve seen fantastic games with fewer than ten ratings, sometimes fewer than five, and this can really dampen the experience, since one of the most rewarding aspects of a game jam is having other people play and enjoy your game.

The other negative thing about a game jam where most games get very few ratings is that the fewer ratings there are, the more arbitrary the winners become. Different developers have very different rating methods. Some developers give out 5s sparingly, saving them for what they consider the best of the best. Other developers give out 3s, 4s and 5s in equal measure. This is fine when each game has been rated many times (twenty or more is a nice standard), but as the number of ratings drops you end up with more and more cases where a good game is given 5s by very lenient raters while a great game is given 4s by very strict raters. (I want to mention that I don’t think there is anything wrong with any method of rating, so long as the method is consistent.) This can lead to results which feel hollow or meaningless.
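To make the lenient/strict problem concrete, here is a small Python sketch. It assumes a hypothetical model where every rater gives a game its "true" quality shifted by a personal bias (+1 for lenient, -1 for strict), clamped to the 1 to 5 scale; the bias values and the two quality levels are made up for illustration.

```python
def rate(true_quality: int, bias: int) -> int:
    """Score a hypothetical rater gives: true quality plus their bias, kept within 1-5."""
    return max(1, min(5, true_quality + bias))


def mean_rating(true_quality: int, biases: list) -> float:
    """Mean score a game receives from raters with the given biases."""
    scores = [rate(true_quality, b) for b in biases]
    return sum(scores) / len(scores)


LENIENT, STRICT = +1, -1  # hypothetical rater biases
GOOD, GREAT = 4, 5        # hypothetical "true" quality of two games

# Few ratings: the good game happens to meet two lenient raters,
# the great game happens to meet two strict raters.
few_good = mean_rating(GOOD, [LENIENT, LENIENT])
few_great = mean_rating(GREAT, [STRICT, STRICT])

# Many ratings: both games see the same balanced mix of raters.
mixed = [LENIENT] * 10 + [STRICT] * 10
many_good = mean_rating(GOOD, mixed)
many_great = mean_rating(GREAT, mixed)

print(few_good, few_great)    # 5.0 4.0 -> the good game ranks higher
print(many_good, many_great)  # 4.0 4.5 -> the great game ranks higher
```

With two ratings each, the ranking depends entirely on which raters each game happened to meet; with twenty, the biases largely cancel out.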

There is also a greater potential for malicious rating. Among other reasons, malicious ratings could occur due to personal reasons (Alice doesn’t like Bob and so gives Bob low ratings) or to game the system (Charlie gives everyone low ratings so that his game ranks higher). One rating out of many is drowned out, but one rating out of a few makes a great difference.
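The "drowned out" effect is simple arithmetic on the mean. A minimal Python sketch, assuming (hypothetically) that every honest rater gives the game a 4 and one malicious rater gives it a 1:

```python
def shift_from_one_rating(num_honest: int, honest_score: float, malicious_score: float) -> float:
    """How far a single malicious rating drags a game's mean below its honest mean."""
    honest_total = num_honest * honest_score
    new_mean = (honest_total + malicious_score) / (num_honest + 1)
    return honest_score - new_mean


print(shift_from_one_rating(3, 4, 1))   # 0.75: noticeable with only three honest ratings
print(shift_from_one_rating(20, 4, 1))  # about 0.143: mostly drowned out by twenty
```

In general the shift is (honest_score - malicious_score) / (num_honest + 1), so its impact shrinks roughly in proportion to how many honest ratings a game has.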

How can developers be encouraged to rate more games?

Different game jams have employed different techniques. Sometimes there is no encouragement. Sometimes there is a leaderboard of the top raters.

For Ludum Dare, a game’s visibility is boosted by the number of games the developer has rated (I’ll call this “involvement”) and dampened by the number of ratings it already has (I’ll call this “popularity”). This leads to very involved developers being very popular and very uninvolved developers being very unpopular. Rate thirty games and you can expect to be rated twenty times. Rate zero games and you can expect to be rated five times. The more you give to other developers, the more you get given. This system also partially solves the issue of web games getting a great deal more attention than downloadable games.
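The following Python sketch shows how "boosted by involvement, dampened by popularity" could play out. The score formula (involvement + baseline) / (popularity + 1) and the baseline of 5 are my own hypothetical choices for illustration, not Ludum Dare’s actual formula.

```python
def allocate_ratings(involvement: list, total_ratings: int, baseline: int = 5) -> list:
    """Hand out ratings one at a time, always to the currently most visible game.

    Hypothetical visibility score: (involvement + baseline) / (popularity + 1), where
    involvement = games the developer has rated and popularity = ratings the game has.
    The baseline stops games by uninvolved developers from becoming invisible.
    """
    popularity = [0] * len(involvement)
    for _ in range(total_ratings):
        scores = [(inv + baseline) / (pop + 1) for inv, pop in zip(involvement, popularity)]
        best = scores.index(max(scores))  # ties go to the earliest game in the list
        popularity[best] += 1
    return popularity


# Three developers who rated 30, 10 and 0 games, sharing 55 incoming ratings.
print(allocate_ratings([30, 10, 0], total_ratings=55))  # [35, 15, 5]
```

Because each rating a game receives lowers its score, every game keeps getting some attention, but games from involved developers are picked far more often.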

Personally, I think Ludum Dare’s rating system is fantastic and certainly the best rating system that I’ve seen, but I think there may be additional things that can be done. I certainly don’t claim that the suggestions in the following sections are necessary or even good ideas. I would like to think of these suggestions as things to consider and discuss.

Additionally, I don’t want to sound like I think developers can’t be trusted. On the contrary, I think that for the most part game jams produce outstandingly genuine and honest feedback and results. I simply like the idea of making such a system tamper-proof. As a result, the discussion mostly focuses on the small number of developers who may not give meaningful ratings.

How do you encourage meaningful ratings?

By meaningful ratings I mean those where the rater played the entry for a reasonable length of time and then rated it thoughtfully. Opening a game and playing it for ten seconds before rating it is not a meaningful rating. Giving every game 3s is not a meaningful rating. Unfortunately, these sorts of meaningless ratings are likely to arise whenever there is an incentive to rate other games. The majority of developers will rate meaningfully, of course, but there will likely be a few developers who aren’t interested in playing other games or are too busy to do so, and as soon as they are required to rate a certain number of games in order for their game to be visible or eligible for rankings, meaningless ratings are introduced.

An idea I had to encourage meaningful ratings is to measure how close a developer’s ratings are to the average ratings. The following method applies not only to game jams, but to any system based on mass peer reviews. To better illustrate this, consider the following ratings:

| Game Name | Mean Rating | Alice’s Rating | Bob’s Rating | Charlie’s Rating | David’s Rating |
|---|---|---|---|---|---|
| Rockety Rockets | 2.9 | 3 | 4 | 3 | 5 |
| Lazy Lasers | 3.2 | 4 | 3 | 3 | 2 |
| Heavy Hammer | 4.6 | 5 | 4 | 3 | 1 |
| Sharp Sword | 1.3 | 1 | 3 | 3 | 5 |

Alice’s ratings are very close to the average, Bob’s ratings are reasonably close, Charlie’s ratings are all the same and David’s ratings are either random or purposefully inverted. This closeness can be measured by the (Pearson) correlation coefficient, ρ. Here are the correlation coefficient values for the previous example (strictly speaking, ρ is undefined for Charlie because his ratings never vary, so it is listed as 0):

| Name | ρ |
|---|---|
| Alice | 0.9802 |
| Bob | 0.6396 |
| Charlie | 0 |
| David | -0.8359 |

The closer ρ is to +1, the more closely the developer’s ratings match the average ratings. A value of 0 means there is no linear relationship at all, which is what you would expect from random ratings. A value of -1 means the developer’s ratings are exactly opposite to the average ratings. Looking at the first table, I would consider only Alice’s and Bob’s ratings to be meaningful, and their large, positive ρ values reflect this. Any meaningful rating should achieve a relatively high correlation coefficient. The more games each person rates, the more accurately the ρ value measures meaningfulness.
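As a sketch, the ρ values in the table above can be reproduced with a plain Pearson correlation in Python. The function name `correlation` is my own, and returning 0 for a rater whose ratings never vary (Charlie) is a convention chosen to match the table, since ρ is otherwise undefined there.

```python
from math import sqrt


def correlation(xs: list, ys: list) -> float:
    """Pearson correlation coefficient of two equal-length lists.

    Returns 0.0 when either list has no variance (e.g. someone who rates every game 3),
    because the coefficient is undefined in that case.
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


# Mean ratings and each rater's ratings, in table order:
# Rockety Rockets, Lazy Lasers, Heavy Hammer, Sharp Sword.
mean_ratings = [2.9, 3.2, 4.6, 1.3]
raters = {
    "Alice": [3, 4, 5, 1],
    "Bob": [4, 3, 4, 3],
    "Charlie": [3, 3, 3, 3],
    "David": [5, 2, 1, 5],
}

for name, ratings in raters.items():
    print(f"{name}: {correlation(ratings, mean_ratings):.4f}")
```

In a real jam, each developer’s ratings would be compared only against the mean ratings of the games they actually rated.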

I think this calculation could be used effectively to measure the quality of a developer’s ratings, or to check which ratings are meaningful and which are not. Of course, any such checks would have to be very generous and give each developer a large margin to account for differences in opinion and taste. The system certainly wouldn’t want to discourage people from rating games in a way that reflects how they truly feel.

There are different ways this information could be used, and I think they fall into two distinct approaches: either reduce the influence that meaningless ratings have, or penalize games made by developers who rate meaninglessly. Unfortunately, both approaches come across as very negative, and either one would have to be finely tuned so that it doesn’t negatively affect developers who have genuinely rated games, while still having some of its intended effect. The right thresholds would depend on a few factors, such as the number of games rated, but as a rough benchmark I believe that most meaningful ratings will have ρ values above 0.6, with a few between 0.5 and 0.6. If these values are accurate, then a ρ value below 0.4 could be considered likely meaningless.
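Here is a rough Python sketch of the first approach (reducing the influence of meaningless ratings), using the 0.6 and 0.4 benchmarks above. The minimum of 10 games rated before ρ is trusted, the function names, and the example ratings are all hypothetical; the gap between 0.4 and 0.6 is treated as "borderline" and kept, in line with giving developers a generous margin.

```python
from typing import List, Optional, Tuple

MEANINGFUL_RHO = 0.6   # rough benchmark: most meaningful raters are above this
MEANINGLESS_RHO = 0.4  # rough benchmark: below this is likely meaningless
MIN_GAMES_RATED = 10   # hypothetical: rho is unreliable for very small samples


def classify_rater(rho: float, games_rated: int) -> str:
    """Label a rater from their correlation with the mean ratings."""
    if games_rated < MIN_GAMES_RATED:
        return "not enough data"
    if rho >= MEANINGFUL_RHO:
        return "likely meaningful"
    if rho < MEANINGLESS_RHO:
        return "likely meaningless"
    return "borderline"


def mean_without_meaningless(ratings: List[Tuple[int, float, int]]) -> Optional[float]:
    """Mean score of a game, ignoring raters classified as likely meaningless.

    Each entry is (score, rater's rho, number of games that rater rated).
    Returns None if no ratings remain.
    """
    kept = [score for score, rho, games in ratings
            if classify_rater(rho, games) != "likely meaningless"]
    return sum(kept) / len(kept) if kept else None


game_ratings = [(5, 0.98, 30), (4, 0.64, 25), (1, -0.84, 40)]
print(mean_without_meaningless(game_ratings))  # 4.5 (a plain mean would be about 3.33)
```

Dropping ratings entirely is the bluntest option; a softer variant could scale each rating’s weight by ρ instead, at the cost of being harder to explain to participants.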

More thoughts to come.

There is a lot more I want to say on this topic but for the time being I will leave it here. I will add more details as I find the time to do so. Also note that there is a good chance I have articulated some points poorly and that I may go back and revise things at a later date. In the meantime, if you have any thoughts or questions on the topic please join in the discussion by leaving a comment below.